Cut-throat AI competition, captive resources, Google's AI moat (Update July 21, 2025)

In AI, the story is no different: economies of scale are king.

Note to reader: this is a longer post than usual, so bear with me!

“We have no moat”, said an infamously leaked Google memo. The memo’s author was opining on Google’s place in the raging AI race.

I think the memo is right when it comes to AI model development.

But when it comes to sustainable competitive advantage among AI firms, Google most definitely has a moat: a two-decade head start on the cost-of-production learning curve (we’ll get to this at the end).

Large language models (LLMs) are the foundation of modern artificial intelligence (AI). When ChatGPT launched in late 2022, the unintended smash hit was powered by an LLM called GPT-3.5.

Today, these models are trained on the world’s written and recorded information: text, photos, videos, and audio.

Predicting the speed of technology advancement and adoption is notoriously hard. One of the major idiosyncratic risks in venture capital investments is “time”. When will AGI arrive? Who knows?

Small club of frontier AI labs

The universe of frontier (bleeding edge) AI model makers is very small.

In the U.S., you can count these AI labs on one hand: OpenAI, Anthropic, Google DeepMind, xAI, and Meta.

If you include non-U.S. labs and U.S. stealth labs[1], the universe grows by perhaps 5-10 firms, depending on how you count.

Everyone is building (roughly) the same thing!

This sets the scene for the world’s most expensive natural experiment in competitive dynamics.

For every frontier lab, at the most foundational level, the goal is the same.

Build the next frontier (most intelligent) AI model and hopefully reach AGI (artificial general intelligence).

In addition to the “end” product—the AI model—being very similar, the resources “consumed” by the labs are also very similar.

The labs basically need 3 types of resources: (1) research talent, (2) GPUs, and (3) data.

The scarcest resource is talent. News of Meta’s $100M offers and of acquihire deals (the $2.4B Google/Windsurf deal and the $14.3B Meta/Scale AI deal) underscores how intense the competition for talent is, and how transferable that talent is.

Next, GPUs are critical. To train and run AI, the labs need hundreds of thousands to millions of NVIDIA’s latest GPUs[2]. To use these GPUs efficiently, purpose-built data centers with sufficient power and connectivity are going up at breakneck speed.

Elon Musk’s xAI built Colossus, a $10B+ data center in Memphis, in ~130 days.

OpenAI is partnering with Oracle to build multiple $10B+ data centers across the U.S. Their Wisconsin data center will be the largest single-building data center in the world.

Finally, the last piece is data. Earlier models used very similar datasets drawn from the public internet. More recently, much of the gain in model performance derives from data creation, evaluation, and generation techniques.

These data techniques are closely guarded trade secrets, since a leaked recipe could easily let another lab take the lead. Meta’s recent stumble with Llama 4 can be partly attributed to inferior data quality and techniques.

Captive resources: the race to keep scaling these resources at all costs

The playbook to make frontier models is simple:

Hire many cracked AI researchers

Give them hundreds of thousands of GPUs wired to run large training jobs

Give them the best data in the world to feed the models

Every frontier lab is pushing the pedal to the metal on all 3 levers.

In that case, what are the sustainable competitive advantages for these model makers? Will everyone arrive at the same outcome? Will the models all simply converge?

Ultimately, AI businesses are no different from any other: delivering intelligence at massive scale will become a firm’s sustainable competitive advantage.

But what does it mean to deliver intelligence at scale?

Economically useful inference supplied

Intelligence is hard to measure; it is relative to the task at hand.

For now, a workable proxy metric is total tokens processed and generated (aka inference supplied). More specifically, it is the quantity of economically useful inference consumed by the market. Sorry, building the world’s largest bean counter with GPUs will not count.

As the basic form of AI “production”, the amount of inference equates to the quantity supplied in classic microeconomics.

To understand why inference is so important, let’s break down how LLMs work.

If you are already familiar, skip ahead to the “AI in the wild: Inference at scale” section.

The makings of an LLM: training models

Pre-training, mid-training, and post-training are the colloquial stages of training.

At each stage, the machine learning objectives and algorithmic optimization techniques are slightly different.

Mid-training and post-training stages are some of the most closely held secrets of modern frontier AI labs.

A glimpse into pre-training: the neural networks that make up these models are tasked with predicting the next “word” in a sequence of “words”.

Next-token prediction, as it is called, is the primary objective in pre-training.

The quick brown fox jumps over the __??__

As the model is trained to predict the next token in every sentence available on the internet, the neural network becomes a highly compressed version of the internet.

What does it mean to “train” a model?

During training, each round of predicting the next tokens and updating the model is called a “step” (in practice, a single step processes a whole batch of sequences at once).

In every “step” of training an AI model, three key components come into play:

data sampling (picking a sequence of words from a dataset, and hiding some words)

inference (predicting the hidden words)

optimization (how close are the predicted words to the actual hidden words? update the model to be better next time)
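The three parts of a step can be sketched in a few lines of Python. This is a minimal illustration, not how any lab trains: it assumes a toy bigram “model” (a table of scores indexed by the previous word) in place of a neural network, and a hand-derived gradient in place of full backpropagation. The corpus, learning rate, and function names are hypothetical.

```python
import math
import random

# Hypothetical toy corpus; frontier labs train on trillions of tokens.
CORPUS = "the quick brown fox jumps over the lazy dog".split()
VOCAB = sorted(set(CORPUS))
INDEX = {word: i for i, word in enumerate(VOCAB)}
V = len(VOCAB)

# Toy "model": one row of scores (logits) per previous word, i.e. a bigram model.
logits = [[0.0] * V for _ in range(V)]


def softmax(row):
    """Turn a row of logits into probabilities over the vocabulary."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def sample_pair(rng):
    """Data sampling: pick a position and hide the word that follows it."""
    i = rng.randrange(len(CORPUS) - 1)
    return CORPUS[i], CORPUS[i + 1]


def train_step(rng, lr=0.5):
    """One step: sample, predict the hidden word, then nudge the model."""
    context, hidden = sample_pair(rng)
    row = logits[INDEX[context]]
    probs = softmax(row)               # inference: predicted distribution
    target = INDEX[hidden]
    loss = -math.log(probs[target])    # cross-entropy: how wrong was it?
    # Optimization: for softmax + cross-entropy, d(loss)/d(logit_j) = p_j - 1[j == target].
    for j in range(V):
        grad = probs[j] - (1.0 if j == target else 0.0)
        row[j] -= lr * grad
    return loss


rng = random.Random(0)
losses = [train_step(rng) for _ in range(2000)]
print(f"avg loss, first 100 steps: {sum(losses[:100]) / 100:.3f}")
print(f"avg loss, last 100 steps:  {sum(losses[-100:]) / 100:.3f}")
```

The average loss over the last 100 steps should come out well below the first 100, the same downward trend labs watch on their loss curves. It cannot reach zero here, because “the” is followed by both “quick” and “lazy” in the corpus.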

We won’t deep-dive into the optimization step in this post; there are many great posts on how it works. Backpropagation is the “magic” behind machine learning optimization.

These steps seem very simple, yet researchers at frontier AI labs like OpenAI, Anthropic, Google DeepMind, and Meta toil over every possible way to improve all three parts of training.

The goal is to train an increasingly powerful and intelligent model, at ever greater compute efficiency.

Inference is AI: it is predictions all the way down

When a user prompts a chatbot like ChatGPT or Claude and hits Enter, the model takes the prompt (the input data) and conducts inference to predict the most likely next word.

Basically, these are the first two steps of the training process:

Prompt: input data

Inference: generate predictions

Of course, these chatbots are performing more than predicting the next word.

Under the hood, the process repeats in a loop (this is called autoregressive generation): after each word is predicted, it is appended to the input data for the next prediction.

The quick brown fox jumps over __??__

The quick brown fox jumps over the __??__

The quick brown fox jumps over the lazy __??__

The end result is a coherent and intelligent response from the AI.
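The autoregressive loop can be sketched with a toy stand-in for the model: a lookup table, built from a tiny hypothetical text, of which word most often follows the previous two words. Real LLMs use a neural network over tokens, and this sketch always takes the single most likely word (greedy decoding), but the feed-the-output-back-in loop is the same idea. All names here are illustrative.

```python
from collections import Counter, defaultdict

# Hypothetical "training" text; real LLMs learn from vastly more data.
TEXT = "the quick brown fox jumps over the lazy dog".split()

# Toy "model": for each pair of consecutive words, count which word follows.
next_counts = defaultdict(Counter)
for a, b, c in zip(TEXT, TEXT[1:], TEXT[2:]):
    next_counts[(a, b)][c] += 1


def predict_next(context):
    """Inference: most likely next word after a 2-word context, or None if unseen."""
    followers = next_counts.get(context)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def generate(prompt, max_new_words=10):
    """Autoregressive loop: each predicted word is appended and fed back in."""
    words = prompt.split()
    for _ in range(max_new_words):
        nxt = predict_next(tuple(words[-2:]))
        if nxt is None:  # no known continuation, so stop generating
            break
        words.append(nxt)
    return " ".join(words)


print(generate("the quick brown fox jumps over"))
# prints: the quick brown fox jumps over the lazy dog
```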

AI in the wild: Inference at scale

Inference (the actual usage of AI), not training, is the structural form of AI production.

In May ’25, Google disclosed that its entire product surface was processing ~500 trillion tokens per month. In April ’25, Microsoft was processing ~50 trillion tokens per month. Neither OpenAI nor Anthropic has publicly disclosed its inference supply. According to Google, its tokens-processed metric grew ~50x year-over-year.

But if we extrapolate from the Google and Microsoft figures and make some assumptions:

OpenAI’s supply is ~3x Google’s scale.

Anthropic is ~1x Google’s scale.

XAI is ~1x Google’s scale.

Meta’s internal product inference is ~1x Google’s scale.

All other inference providers (including Microsoft) are ~1x Google’s scale.

Including Google, total inference supply would be ~8x Google scale.

Today’s inference supply is probably somewhere around ~5-10x Google’s scale, equating to roughly 2,500 to 5,000 trillion tokens processed per month.
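The tally above is simple arithmetic. A quick sketch, using Google’s ~500 trillion tokens/month as one unit of “Google scale” and the multiples assumed in the list (the dictionary keys are just labels):

```python
# ~500 trillion tokens/month: Google's May '25 disclosure, used as one "Google scale".
GOOGLE_TOKENS_PER_MONTH = 500e12

# The post's assumed inference supply, as multiples of Google's scale.
multiples = {
    "OpenAI": 3,
    "Anthropic": 1,
    "xAI": 1,
    "Meta (internal)": 1,
    "All others (incl. Microsoft)": 1,
    "Google": 1,
}

total_multiple = sum(multiples.values())
total_tokens = total_multiple * GOOGLE_TOKENS_PER_MONTH

print(f"~{total_multiple}x Google scale")                          # ~8x Google scale
print(f"~{total_tokens / 1e12:,.0f} trillion tokens per month")  # ~4,000 trillion tokens per month
```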

Let’s assume a more moderate growth rate of 25x YoY (vs. Google’s most recent 50x YoY). That leads to some crazy implications.

Suppose today’s global inference demand requires ~250k H100s[3] (an intentionally low estimate). To give a sense of the scale of ~250,000 H100 GPUs, xAI’s Grok 4 model was trained in Memphis on Colossus, a cluster of 200,000 Hopper GPUs.

At that rate, global tokens processed double every ~3 months[4]!

To keep serving global demand, we’d need to add roughly 25 Colossus-sized data centers within a year.
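The growth arithmetic can be checked with a short script. It assumes, as above, 25x YoY growth and a starting fleet of ~250k H100s, plus one extra simplifying assumption: GPU demand scales one-for-one with token demand. Depending on whether a Colossus is counted as ~200k GPUs (its stated size) or ~250k, the added capacity works out to about 24 to 30 clusters, bracketing the ~25 in the text.

```python
import math

YOY_GROWTH = 25.0        # assumed 25x year-over-year growth (vs. Google's ~50x)
TODAY_H100S = 250_000    # intentionally low estimate of today's inference fleet
COLOSSUS_GPUS = 200_000  # Colossus: ~200k Hopper GPUs

# Compound monthly rate that multiplies to 25x over 12 months.
monthly_growth = YOY_GROWTH ** (1 / 12) - 1
doubling_months = math.log(2) / math.log(1 + monthly_growth)

# Assumption: GPU demand grows one-for-one with token demand.
fleet_next_year = TODAY_H100S * YOY_GROWTH
added_gpus = fleet_next_year - TODAY_H100S

print(f"month-over-month growth: {monthly_growth:.1%}")          # 30.8%
print(f"doubling time: {doubling_months:.1f} months")             # 2.6 months
print(f"GPUs to add in 12 months: {added_gpus:,.0f}")             # 6,000,000
print(f"  = {added_gpus / COLOSSUS_GPUS:.0f} clusters of 200k")   # 30
print(f"  = {added_gpus / 250_000:.0f} clusters of 250k")         # 24
```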

A different kind of learning curve for scale

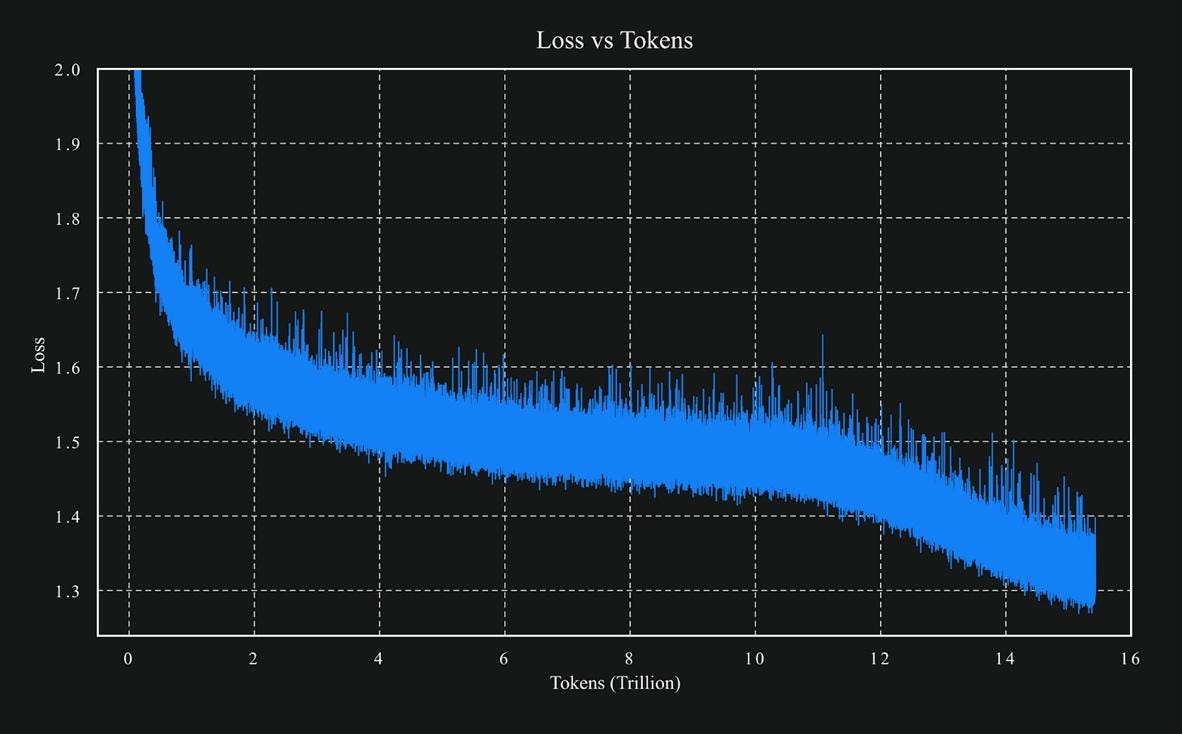

In machine learning, researchers are used to monitoring learning curves. The curves help researchers understand if the model is learning.

As training continues (more training steps with data/tokens), the model should become more accurate in its prediction—meaning the loss (error) metric should go down.

In this curve for the Kimi K2 model, you can see the loss fall as more tokens are processed in training.

In industrial and manufacturing settings, a different kind of learning curve is used to analyze the unit cost of production as cumulative output increases.

This is an actual learning curve from an HBS case describing the unit costs (in labor terms) of one of DuPont’s chemical plants. To reach costs of ~7 work hours per metric ton produced, DuPont had to cumulatively produce ~0.6M metric tons of chemicals over 10 years.

For any new entrant to the market, the only path to similar unit costs is to catch up in cumulative production.

A would-be DuPont competitor would somehow have to catch up on ~10 years of production.
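Industrial learning curves like this one are commonly modeled with Wright’s law (the experience curve): unit cost falls by a fixed percentage every time cumulative output doubles. A minimal sketch, assuming a hypothetical 80% learning rate and made-up output figures rather than the HBS case’s actual data:

```python
import math


def unit_cost(cumulative_units, first_unit_cost, learning_rate):
    """Wright's law: unit cost is multiplied by `learning_rate` each time cumulative output doubles."""
    b = -math.log2(learning_rate)  # e.g. an 80% curve gives b ≈ 0.32
    return first_unit_cost * cumulative_units ** (-b)


# Hypothetical parameters, not DuPont's actual figures.
FIRST_UNIT_COST = 100.0
LEARNING_RATE = 0.8  # each doubling of cumulative output cuts unit cost by 20%

incumbent_output = 1_000_000  # units produced so far by the incumbent
entrant_output = 1_000        # units produced so far by a new entrant

incumbent_cost = unit_cost(incumbent_output, FIRST_UNIT_COST, LEARNING_RATE)
entrant_cost = unit_cost(entrant_output, FIRST_UNIT_COST, LEARNING_RATE)
print(f"incumbent unit cost: {incumbent_cost:.2f}")
print(f"entrant unit cost:   {entrant_cost:.2f}")
print(f"entrant pays ~{entrant_cost / incumbent_cost:.1f}x more per unit")
```

Under these made-up numbers the entrant’s unit cost is roughly 9x the incumbent’s, and on this curve the only way to close the gap is to accumulate comparable output.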

Google does have a moat: their cost advantages are emerging

The leaked Google memo claiming there is no moat is partially correct.

In terms of producing the best AI model, the inputs (talent, GPUs, and data, as discussed above) are commoditized and transferable.

Any new entrant can acquire those inputs (likely at increasing cost as supply dwindles) to compete in model development.

Yet, Google’s moat is not in model development.

Its moat comes from serving inference: deploying AI models to the world at scale. This has always been Google’s core mission: using machine learning to organize information.

While Google Cloud Platform (GCP) is a laggard in the hyperscaler cloud business, Google (like Meta) has decades of experience running data centers for machine learning workloads.

Google’s ML workloads are mostly internal, and its data centers are purpose-built to be the best infrastructure in the world.

xAI’s Colossus cluster may compete in compute capacity, but it is not even close to Google’s data centers in operational efficiency.

Using the industrial learning curve analogy, Google has cumulatively produced over two decades of ML workloads, the form of production most similar to today’s LLM workloads.

The leading provider (OpenAI) has a lot of catching up to do.

While some of Google’s past ML learnings are not directly transferable, most of its distributed computing learnings are likely already being applied to inference at scale.

It is no wonder that Google’s models sit on the cost-performance Pareto frontier among leading AI models.
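“Pareto frontier” here means the set of models that no other model beats on cost and performance at the same time. A minimal sketch of how such a frontier is computed, with entirely hypothetical model names, prices, and scores (not real benchmark data):

```python
from typing import NamedTuple


class Model(NamedTuple):
    name: str
    cost: float   # price per million tokens (lower is better)
    score: float  # benchmark score (higher is better)


def dominates(a, b):
    """True if `a` is at least as cheap and as good as `b`, and strictly better on one axis."""
    return (a.cost <= b.cost and a.score >= b.score
            and (a.cost < b.cost or a.score > b.score))


def pareto_frontier(models):
    """Models that no other model dominates, sorted from cheapest to priciest."""
    frontier = [m for m in models if not any(dominates(o, m) for o in models)]
    return sorted(frontier, key=lambda m: m.cost)


# Hypothetical models and numbers, for illustration only.
models = [
    Model("A", cost=0.5, score=60),
    Model("B", cost=2.0, score=75),
    Model("C", cost=3.0, score=70),  # dominated by B: pricier and worse
    Model("D", cost=10.0, score=85),
]
print([m.name for m in pareto_frontier(models)])  # ['A', 'B', 'D']
```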

Overcapacity: massive stumbling possibility

As OpenAI, Anthropic, and xAI race to build GPU capacity for training and inference, their economics face a massive risk of stumbling.

Sudden GPU overcapacity is possible. If one of the frontier labs creates an AI model that is a step change ahead of the competition, demand can very quickly dissipate from the previous leading providers.

Demand will follow the best supply, but GPUs sitting in data centers cannot.

It is a tightrope for the new AI labs to walk: securing enough GPUs for research and inference without oversupplying in case of disruption.

[3] Back-of-the-napkin estimate of GPU requirements; it is likely a conservative one.

4,000 trillion tokens per month translates to ~133 trillion tokens per day (assuming a 30-day month).

In a recent DeepSeek disclosure, processing ~780B tokens per day required 1,814 NVIDIA H800 GPUs for its R1 models.

To supply ~4,000 trillion tokens of global inference per month with R1 models, it would require ~310,000 H800 GPUs.

Assuming 1x H800 = 0.8x H100, ~310,000 H800 GPUs would be equivalent to ~250,000 H100 GPUs.

However, DeepSeek's inference stack prioritized cost, not latency.

Its inference speed is 2-3x slower than that of leading providers. In inference, higher speed (lower latency) requires more GPUs for the same token volume.

Accounting for latency, the actual global inference capacity for ~133 trillion tokens per day is likely somewhere between 0.25M and 2.5M H100 GPUs, depending on API providers’ latency requirements.

The wide range of 250k to 2.5M GPUs also helps account for the likely spread of model sizes, from 2B to 1T+ parameters.
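The footnote’s arithmetic, as a short script. The DeepSeek figures and the 0.8 H800-to-H100 ratio come from the text; the 30-day month and linear scaling of GPUs with token volume are assumptions, and the 10x upper multiplier simply reproduces the text’s 0.25M to 2.5M range rather than modeling latency or model size.

```python
# Figures from the footnote: DeepSeek's disclosed serving load and fleet.
DEEPSEEK_TOKENS_PER_DAY = 780e9  # ~780B tokens/day
DEEPSEEK_H800S = 1_814           # H800 GPUs serving that load
H800_PER_H100 = 0.8              # assumed: 1 H800 ≈ 0.8 H100

MONTHLY_TOKENS = 4_000e12        # ~4,000 trillion tokens/month of global inference
DAYS_PER_MONTH = 30              # assumption for the per-day conversion

daily_tokens = MONTHLY_TOKENS / DAYS_PER_MONTH                   # ~133T tokens/day
# Scale DeepSeek's fleet linearly with token volume, as the footnote does.
h800s = daily_tokens / DEEPSEEK_TOKENS_PER_DAY * DEEPSEEK_H800S
h100s = h800s * H800_PER_H100

# The text's range: 1x (cost-optimized, DeepSeek-like) up to 10x.
low, high = h100s, 10 * h100s

print(f"tokens/day:  {daily_tokens / 1e12:,.0f} trillion")
print(f"H800s:       {h800s:,.0f}")
print(f"H100 equiv.: {h100s:,.0f}")
print(f"range:       {low / 1e6:.2f}M to {high / 1e6:.2f}M H100s")
```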

[4] 25^(1/12) − 1 ≈ 30.8% month-over-month growth. At that rate, volume doubles every ln(2) / ln(1.308) ≈ 2.6 months.