Something Small is Happening

March of the 9s in the age of computing

Matt Shumer’s “Something Big Is Happening” went viral this week. He’s right about the pace.

On February 5th, OpenAI and Anthropic dropped GPT-5.3 Codex and Opus 4.6 on the same day. The SaaSpocalypse wiped $550B from software stocks. Hyperscalers committed $660B in capex for 2026.

Something big IS happening.

But if you look closer, the progress and change are driven by something small.

Something small is happening.

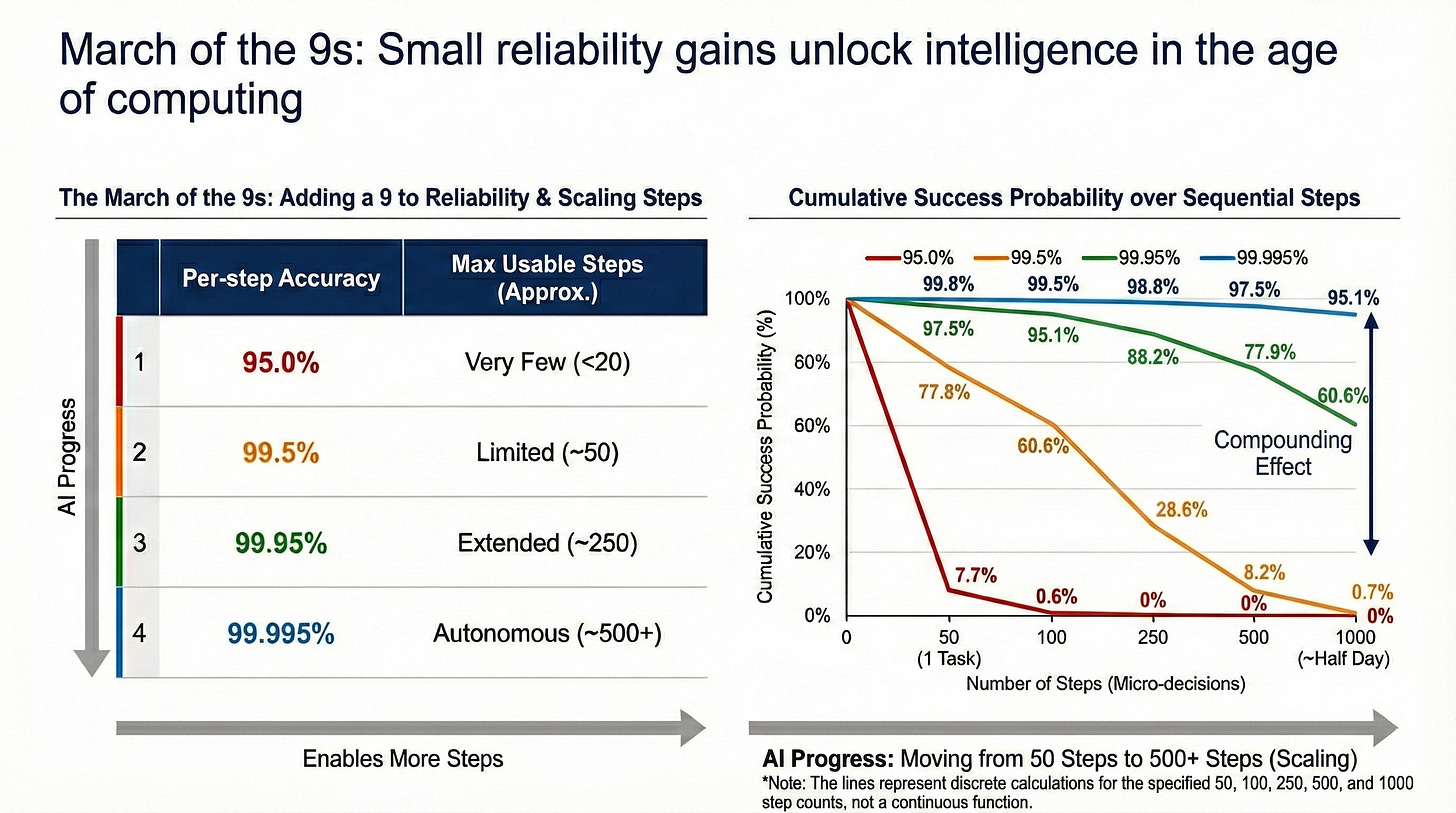

The March of the 9s

A knowledge worker makes 50-100 micro-decisions to complete a single task.

Open the right folder (find the right email)

Search the right term in Google

Click the right result, read it, go back to search page

Save the source, write a note on the research

Draft an intro paragraph to the research brief

etc...

Sequential, contextual, compounding. That is how human knowledge work manifests today.

Here’s the math that matters

Each additional “9” is a small absolute gain in step-level reliability. Going from 99.5% to 99.95% is less than half a percentage point.

But over 50 sequential steps, that half-point is the difference between ~78% and ~98% success. Over 500 steps, roughly half a day of autonomous work, it’s the difference between ~8% and ~78%: near-total failure versus a task that usually finishes.

This is why AI seems to “suddenly” get good. It crossed a compounding threshold.
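The arithmetic is easy to check. Here is a minimal sketch, assuming every step succeeds independently and with the same probability (real agent steps are neither, so treat this as a toy model). The function name `task_success` is mine, for illustration only.

```python
def task_success(step_reliability: float, steps: int) -> float:
    """Chance that all `steps` sequential steps succeed.

    Assumption: steps are independent and equally reliable,
    so the per-step probabilities simply multiply.
    """
    return step_reliability ** steps


if __name__ == "__main__":
    # The two per-step reliabilities and task lengths used in the text.
    for p in (0.995, 0.9995):
        for n in (50, 500):
            print(f"per-step {p:.2%}, {n} steps -> {task_success(p, n):.1%} end-to-end")
```

Under that assumption, 99.5% per step gives roughly 78% at 50 steps and 8% at 500, while 99.95% gives roughly 98% and 78%.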

Code generation by LLMs has existed since GPT-3 Davinci in 2020 and the original Codex models in 2021. But “vibe coding” didn’t take off until Karpathy coined the term in February 2025. Again, it’s a story of compounding progress.

The story of computing

The $660B in hyperscaler capex is the bill for the next 9, and the next 9 after that. Each 9 requires more compute, more data, more energy. The returns diminish at the step level but compound at the system level.

As I wrote last October:

Power unlocks compute. Compute unlocks AI. AI unlocks intelligence.

Satya Nadella’s framing is instructive:

[…] the company just literally provisions a computing resource for an AI agent, and that is working fully autonomously. That fully autonomous agent will have essentially an embodied set of those same tools that are available to it. So this AI tool that comes in also has not just a raw computer, because it’s going to be more token-efficient to use tools to get stuff done.

But an always-on agent running 500-5000 steps needs more 9s of reliability to work.
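You can run the same math backwards to see how many 9s a long-running agent needs. This is a rough sketch under the same toy assumption (independent, equally reliable steps); the 90% end-to-end target and the helper names `required_step_reliability` and `nines` are my own choices for illustration.

```python
import math


def required_step_reliability(target_success: float, steps: int) -> float:
    """Per-step reliability needed so that `steps` sequential,
    independent steps all succeed with probability `target_success`."""
    return target_success ** (1.0 / steps)


def nines(reliability: float) -> float:
    """Express a reliability as a count of 9s (0.99 -> 2, 0.999 -> 3)."""
    return -math.log10(1.0 - reliability)


if __name__ == "__main__":
    target = 0.90  # assumed end-to-end success target, not from any benchmark
    for n in (50, 500, 5000):
        p = required_step_reliability(target, n)
        print(f"{n} steps -> {p:.5%} per step ({nines(p):.2f} nines)")
```

In this model, every 10x increase in task length costs about one more 9 of per-step reliability to hold the same end-to-end success rate.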

GPT-5.3 Codex and Opus 4.6 didn’t shock because they were categorically different.

They shocked because they added another 9.

And at this point on the curve, each 9 is a phase change.