Power & Fab Capacity: Last Jigsaw Pieces in the Dash for Compute (Update Oct 9, 2025)

Modern Alchemy: Energy into Intelligence

Over the past couple of months (Sept/Oct 2025), OpenAI has announced a spate of deals with NVIDIA, AMD, Oracle, Samsung, SK, and many others. The Financial Times and Bloomberg estimate OpenAI’s dealmaking now involves commitments topping $1 trillion.

Rightfully, the financial press and analysts have sounded alarm bells about a bubble: circular financing, Oracle's 500% debt-to-equity ratio, and Meta’s off-balance-sheet special purpose vehicle (SPV).

Even one of the ever-optimistic management consultancies (Bain) has questioned how the estimated $2 trillion needed for AI data centers by 2030 will be funded.

Yet, I predict that dealmaking is not over.

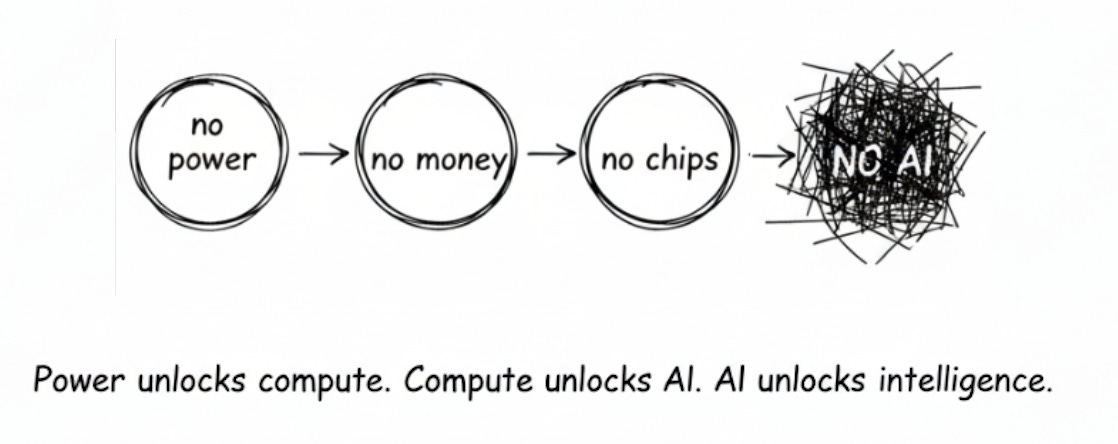

The compute jigsaw puzzle is still missing two critical pieces.

Where are the power and energy deals?

These AI chips will need power. Many gigawatts (GWs) of power. Without it, GPUs are just $50,000 paperweights in a server rack.

Where are the new chip fabs?

As far as we know, TSMC is the primary chip fab service provider capable of mass-manufacturing bleeding-edge AI chips at an efficient volume. Fabs take years to build, and their future production capacity has long been reserved by the likes of Apple, NVIDIA, and Google.

Economically useful inference: Structural form of production in the AI Era

As I have publicly written about before, AI inference (a technical term for an AI model’s process of producing output) is the structural form of production in the AI era.

More importantly, we should care very deeply about attempting to measure the amount of useful intelligent inference over time. This will be the best indicator of AI’s long-term trajectory. However, what counts as “useful intelligence” depends on the user and the surrounding context.

Meanwhile, tokens processed can be a noisy proxy for understanding the progress toward more intelligence. Without a doubt, this metric is continuing to grow explosively—mostly constrained by the ability of AI providers to match demand.

In May 2025, Google disclosed its AI workload grew ~50x year-over-year, processing around 500 trillion tokens per month across its products.

From my previous estimates:

Today’s global tokens processed is doubling every ~3 months!

To continue to serve global demand this year, we’d need to add 25 Colossus data centers in a year’s time.

Each xAI Colossus consumes ~300MW of power.

25 Colossus-sized data centers require ~7.5GW of power, or roughly enough to power three San Francisco-sized cities.
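
The arithmetic behind these estimates can be checked with a minimal sketch. The function names are hypothetical, and the inputs are simply the figures quoted above (a ~3-month doubling time, 25 sites, ~300MW per site), not independent measurements.

```python
def annual_growth_factor(doubling_months: float) -> float:
    """Growth multiple over 12 months for a given doubling period."""
    return 2 ** (12 / doubling_months)


def total_power_gw(sites: int, mw_per_site: float) -> float:
    """Combined power draw in gigawatts for identical sites."""
    return sites * mw_per_site / 1000


# Figures taken from the estimates above (assumed, not measured here).
growth = annual_growth_factor(doubling_months=3)
power = total_power_gw(sites=25, mw_per_site=300)

print(f"Tokens processed grow ~{growth:.0f}x per year")
print(f"25 Colossus-sized sites draw ~{power:.1f} GW")
```

Under these assumptions, a 3-month doubling time compounds to four doublings a year, and the power total is a straight multiplication. Both outputs are only as good as the rough inputs.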

OpenAI’s dealmaking is directly responding to the expected growth in inference demand.

Modern Alchemy: Energy into Intelligence

In Vaclav Smil’s book “How the World Really Works,” he provides a powerful analysis of how human progress is tied to energy consumption.

“An average inhabitant of the Earth nowadays has at their disposal nearly 70 times more useful energy than their ancestors had at the beginning of the 19th century. [...]

Translating the last rate into more readily imaginable equivalents, it is as if every average Earthling has every year at their personal disposal about 800 kilograms (0.8 tons, or nearly six barrels) of crude oil, or about 1.5 tons of good bituminous coal.

And when put in terms of physical labor, it is as if 60 adults would be working non-stop, day and night, for each average person; and for the inhabitants of affluent countries this equivalent of steadily laboring adults would be, depending on the specific country, mostly between 200 and 240.”

In today’s world economy, energy consumption is a critical factor in the goods economy—but less so in the service economy. AI will soon flip this paradigm.

Two quick illustrations:

China, the world’s manufacturing center (~30% of global manufacturing), has grown its electricity generation capacity at a compound annual growth rate of ~8.5% since 2000. In 2024 alone, it added more renewable energy capacity than the entire installed renewable power capacity of the United States.

TSMC, the world’s primary supplier of cutting-edge AI chips (~90% market share), is projected to consume ~12% of Taiwan’s total power generation by the end of 2025. TSMC contributes ~8% of Taiwan’s GDP.
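
To get a feel for what a ~8.5% compound annual growth rate means, here is a minimal sketch of the compounding. The 24-year window (2000 to 2024) is an assumption made for illustration, and the function names are hypothetical.

```python
import math


def compound_multiple(cagr: float, years: int) -> float:
    """Total growth multiple after compounding at `cagr` for `years` years."""
    return (1 + cagr) ** years


def doubling_time_years(cagr: float) -> float:
    """Years needed to double at a constant compound growth rate."""
    return math.log(2) / math.log(1 + cagr)


# ~8.5% CAGR is the figure quoted above; the 24-year window is assumed.
multiple = compound_multiple(cagr=0.085, years=24)
doubling = doubling_time_years(cagr=0.085)

print(f"Capacity multiple over 24 years: ~{multiple:.1f}x")
print(f"Implied doubling time: ~{doubling:.1f} years")
```

At that rate, capacity would roughly double every eight and a half years, which works out to about a sevenfold increase over the assumed window.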

Today, most AI infrastructure projects face supply constraints: chips, land, data center shells, networking parts, etc.

But the constraint that overwhelms all others is power.

Power dictates winners in AI

The U.S. has had roughly stagnant power generation capacity for the last two decades. Like much of America’s aging infrastructure, power transmission capacity upgrades have been behind schedule for roughly the same amount of time.

Reading between the lines of the recent NVIDIA/OpenAI and AMD/OpenAI deals, GWs of data centers require GWs of new power generation capacity.

This is the most revealing tell:

NVIDIA: To support the partnership, NVIDIA intends to invest up to $100 billion in OpenAI progressively as each gigawatt is deployed.

AMD: The first tranche vests with the initial 1 gigawatt deployment, with additional tranches vesting as purchases scale up to 6 gigawatts.

The mutually agreed-upon benchmark for progress is bringing GWs of AI data centers online. Therefore, if we track inference as a measure of AI progress, then power usage will be a major determinant of success.

Over all time horizons, whether the short term (today), the medium term (2-4 years), or the long term (5-10 years), the story will be the same.

Power unlocks compute. Compute unlocks AI. AI unlocks intelligence.

Smil’s insights still stand in the era of AI.

How does the world really work? Energy.

Foundry details missing: Samsung/SK & NVIDIA/Intel

OpenAI is planning to add ~20GW of data centers over the next decade. Sam Altman blogged about adding ~1GW every week as a longer-term goal.

Today, the cost of AI chips makes up ~50-80% of the total cost of ownership of a new AI data center.

With OpenAI opening a dual-sourcing approach to its supply of AI chips (making deals with both NVIDIA and AMD), the question remains of how TSMC will be able to absorb all of the demand from the chipmakers.

Most advanced AI chip manufacturing is done by TSMC. TSMC produces bleeding-edge chips for NVIDIA, AMD, Apple, and Google, and that supply is already earmarked.

New fabs take around 19-38 months (~19 months in Taiwan, ~38 months in the U.S.) to be built. Supply will take time to come online.

Samsung is the closest competitor, with fabs in Korea and the U.S. (soon to come online) capable of manufacturing bleeding-edge chips.

So the final puzzle piece:

Which of the foundries (TSMC, Samsung, or Intel) will attempt to fill the gap in supply? How quickly can they ramp up production?

Who is going to guarantee their demand if the price of chips falls through the floor (due to an increase in market supply)?